Overview #

I wrote this guide to provide newcomers to AWS with an overview of AWS networking. It covers the following terms:

- VPC

- Regions

- Subnets

- Availability Zones

- Route Tables

- Gateways

- Elastic Network Interfaces

- Load Balancers

- Security Groups

- Bastions or Jump Hosts

- VPC Peering

- VPC PrivateLink

Back In The Day #

In the early days of AWS, we used what is now known as EC2-Classic. Everything was provisioned in a single, flat network that was shared with all AWS customers. This came before Virtual Private Clouds (VPC).

If you are reading my guide: ignore any documentation or article that mentions EC2-Classic. That has been deprecated. Nobody reading this should need to know anything about EC2-Classic. (Trust me: you lucked out.)

AWS Accounts and VPCs #

We start with an AWS Account.

An AWS Account has many Virtual Private Clouds. When you spell it out like that, it does not make sense, because AWS is the cloud. Most people would say “an AWS Account has many VPCs”, using the acronym and never its full name.

You can think of VPCs as being defined by their region and their private IP CIDR block, which circumscribes the IP address space that the VPC can span, and from which it can allocate addresses. A private IPv4 CIDR block looks like 10.0.0.0/24. The largest block size allowed by AWS is /16.

$$\rm{VPC} := (\rm{CIDR}, \rm{Region})$$

Each VPC resides within a single region.

Should I always use the largest allowed CIDR block, i.e. 10.x.0.0/16? #

You may have multiple VPCs, or need multiple VPCs in the future. It is easier to manage inter-VPC connectivity if their CIDRs do not overlap. It is possible and feasible to set up inter-VPC connectivity even with overlapping CIDRs, but those techniques are more complex. See https://serverfault.com/a/636524 for a more in-depth recommendation.

That said, the $x$ in 10.x.0.0/16 can take $2^8 = 256$ possible values, which lets you create 256 non-overlapping VPCs. That is a lot of VPCs. If you find yourself needing more, you might want to look into IPv6.
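
To make the counting concrete, here is a minimal sketch using Python's standard `ipaddress` module. It enumerates every 10.x.0.0/16 block inside 10.0.0.0/8 and checks that no two of them overlap. The variable names are illustrative only.

```python
import ipaddress

# Every /16 that fits inside the private 10.0.0.0/8 range, i.e. 10.x.0.0/16.
vpc_blocks = list(ipaddress.ip_network("10.0.0.0/8").subnets(new_prefix=16))

print(len(vpc_blocks))              # number of candidate VPC CIDRs
print(vpc_blocks[3])                # the block for x = 3
print(vpc_blocks[3].num_addresses)  # addresses available in one /16

# Check every distinct pair of blocks for overlap.
no_overlap = all(
    not a.overlaps(b)
    for i, a in enumerate(vpc_blocks)
    for b in vpc_blocks[i + 1:]
)
print(no_overlap)
```

Picking one distinct `x` per VPC up front is the cheapest way to keep future inter-VPC connectivity simple.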

VPCs, Public Subnets, and Private Subnets #

A VPC has many subnets. You can think of subnets as being defined by their sub-range of the VPC’s CIDR block, and their availability zones. (We will go into availability zones later.)

Different subnets can be routed differently, which lets us reason about all sorts of subnet properties. Most of the time, we use them to segment our AWS resources, so we can reason about network security by thinking about what is reachable from a given point, and what is not.

$$\rm{Subnet} := (\rm{VPC}, \rm{SubCIDR}, \rm{AZ})$$

Each subnet resides within a single availability zone.
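
As a rough illustration of the tuple above, the following sketch carves a 10.3.0.0/16 VPC block into /24 slices and pairs each slice with one AZ. The /24 size, the AZ names, and the `Subnet` type are assumptions made for the example, not anything AWS prescribes.

```python
import ipaddress
from typing import NamedTuple

class Subnet(NamedTuple):
    """Mirrors Subnet := (VPC, SubCIDR, AZ) from the text."""
    vpc_cidr: ipaddress.IPv4Network
    sub_cidr: ipaddress.IPv4Network
    az: str

vpc_cidr = ipaddress.ip_network("10.3.0.0/16")
azs = ["us-west-2a", "us-west-2b", "us-west-2c"]  # hypothetical AZ names

# Take the first few /24 slices of the VPC block, one per AZ.
slices = vpc_cidr.subnets(new_prefix=24)
subnets = [Subnet(vpc_cidr, next(slices), az) for az in azs]

for s in subnets:
    # Each subnet's range must sit inside its VPC's range.
    assert s.sub_cidr.subnet_of(s.vpc_cidr)
    print(s.sub_cidr, s.az)
```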

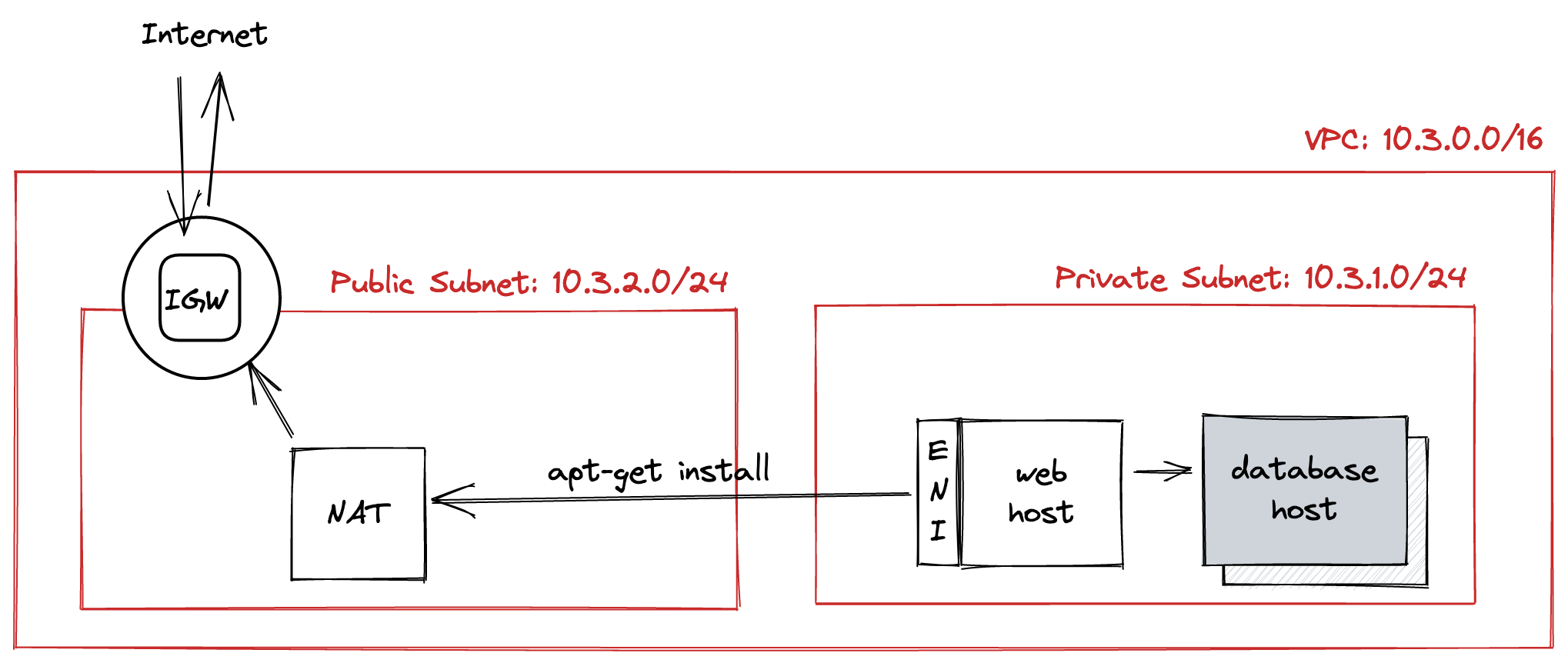

A subnet that has a route to an Internet Gateway (IGW) is known as a public subnet. These subnets can reach the Internet directly, and can be reached from the Internet directly. AWS resources provisioned inside public subnets each get their own public IP address, and the IGW helps IP packets find these resources.

Subnets that do not have routes to IGWs are known as private subnets. These subnets cannot reach the Internet directly, cannot be reached from the Internet directly, and cannot use public IP addresses.

Sometimes, you want resources provisioned inside private subnets to be able to reach the Internet (say, to run apk install). The most common solution is to deploy a NAT Gateway in some public subnet, and then route traffic from private subnets through it. NAT Gateways cost $0.045 per GB of data processed, on top of an hourly charge; exact prices vary by region.

Net. 1: Segmenting public and private subnets inside a VPC.

Memory Tip: Gateways let traffic in or out of your VPC.

Subnets and Elastic Network Interfaces #

Elastic Network Interfaces (ENIs) are like network cards that are provisioned in the cloud. Each one usually has a MAC address, and you can see them from your EC2 instance by running ip addr and looking for eth or ens.

    $ ip addr
    <...>
    1: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 <...>
        link/ether df:30:14:29:9b:2e brd ff:ff:ff:ff:ff:ff
                   ^^^^^^^^^^^^^^^^^ <--- MAC address
        inet 10.0.3.10/24 brd 10.0.3.255 scope global dynamic eth0
             ^^^^^^^^^^^^ <--- IPv4 address and subnet mask
    <...>

These network interfaces can have private IP addresses and public IP addresses. ENIs are attached to anything in AWS that has an IP address. I am not going to include them in any of the diagrams, because most of the time you do not have to worry about ENIs. They are almost always implicitly created when you provision some other resource, such as an EC2 instance, an ECS task, or a NAT Gateway.
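
The `inet` value in the `ip addr` output above packs an address and a subnet mask into one string. As a small sketch, Python's standard `ipaddress` module can unpack it and recover the broadcast address that appears after `brd`:

```python
import ipaddress

# The value copied from the "inet" line of the ip addr output.
iface = ipaddress.ip_interface("10.0.3.10/24")

print(iface.ip)                         # the interface's private IPv4 address
print(iface.network)                    # the subnet range it belongs to
print(iface.network.netmask)            # the /24 mask in dotted form
print(iface.network.broadcast_address)  # matches the "brd" field
```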

Subnets and Route Tables #

A VPC has many Route Tables. Route Tables can be associated with zero or more subnets. Route Tables describe how IP packets are routed out of an associated subnet. (Think egress.)

The following example describes some of the rows you might find in a route table associated with a public subnet. The subnet here is part of a 10.3.0.0/16 VPC.

| Destination | Target | Explanation |
|---|---|---|
| ::/0 | igw-090ff1ce | Routes IPv6 packets to the internet gateway for the Internet. |
| 0.0.0.0/0 | igw-090ff1ce | Routes IPv4 packets to the internet gateway for the Internet. |
| 10.3.0.0/16 | local | Allows subnets to reach other subnets; these IP packets are routed within the VPC and do not use any of the gateways. |
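
When several rows match a destination, the most specific one (the longest prefix) wins. The sketch below simulates that lookup for the three rows in the table above. It is a simplified model for building intuition, not how AWS implements routing, and `lookup` is a hypothetical helper name.

```python
import ipaddress

# (destination, target) rows copied from the table above.
ROUTES = [
    ("::/0", "igw-090ff1ce"),
    ("0.0.0.0/0", "igw-090ff1ce"),
    ("10.3.0.0/16", "local"),
]

def lookup(dest_ip: str) -> str:
    """Return the target of the most specific route containing dest_ip."""
    addr = ipaddress.ip_address(dest_ip)
    matches = []
    for destination, target in ROUTES:
        net = ipaddress.ip_network(destination)
        # Only compare like with like: IPv4 routes for IPv4 packets, etc.
        if net.version == addr.version and addr in net:
            matches.append((net.prefixlen, target))
    if not matches:
        raise LookupError(f"no route to {dest_ip}")
    # Longest prefix wins, so /16 beats /0 for addresses inside the VPC.
    return max(matches, key=lambda m: m[0])[1]

print(lookup("10.3.7.20"))      # another subnet in the same VPC
print(lookup("93.184.216.34"))  # an IPv4 address outside the VPC
print(lookup("2001:db8::1"))    # an IPv6 address outside the VPC
```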

Private Subnets, Public Load Balancers, and Bastions #

Circling back to why we have subnets: most of the time, we use them to segment our AWS resources, so we can reason about network security by thinking about what is reachable from a given point, and what is not.

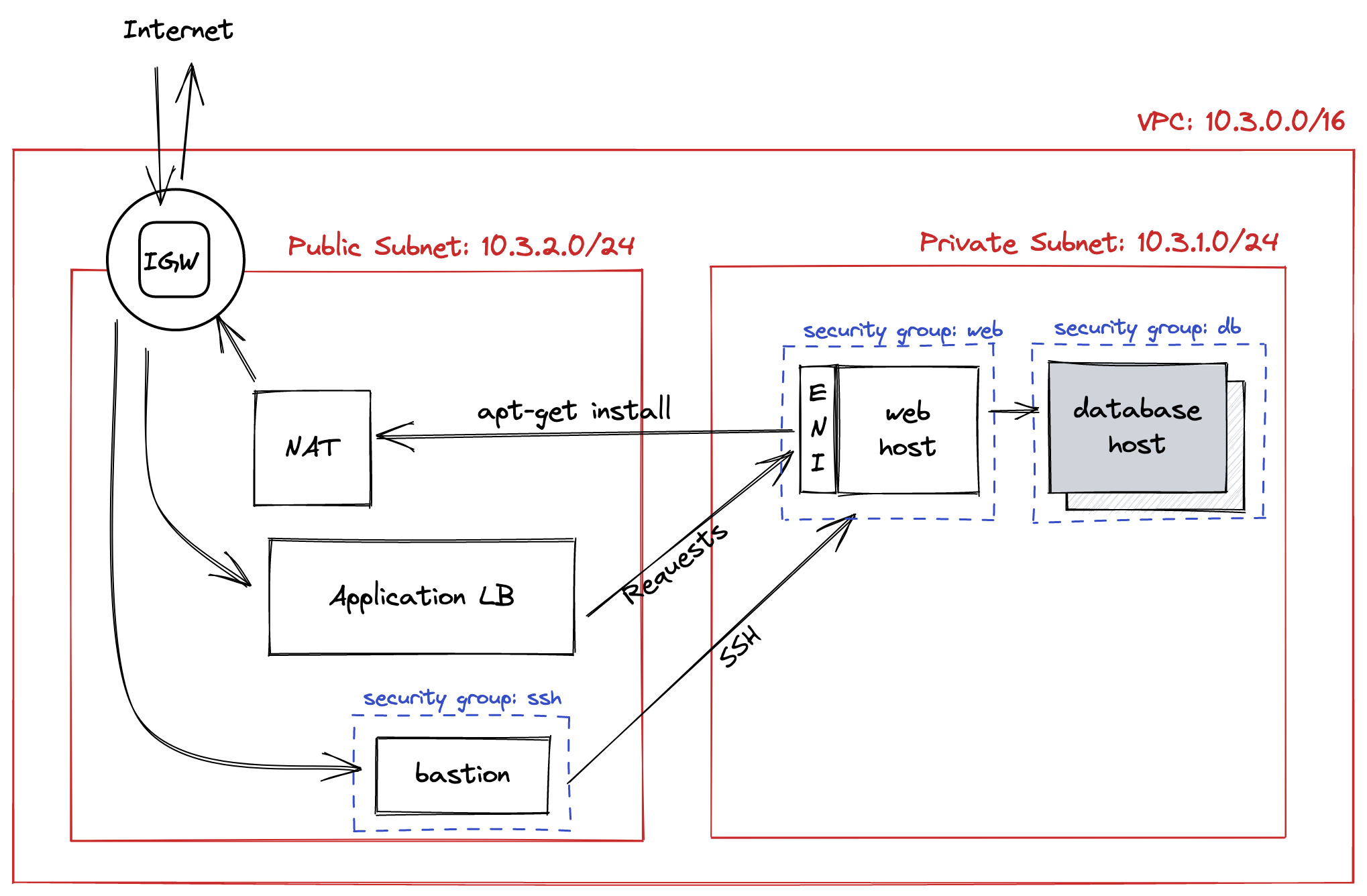

Usually, we like to provision most of our assets (e.g. web servers, databases, caches) inside shared or separate private subnets, so that these are not directly reachable from the Internet. In this setup, we would also provision Internet-facing load balancers in the public subnet, and forward traffic to web servers that are in the private subnets. (See How to Segment the Data Center for more.)

Memory Tips

- ELB v1 is the same as Classic ELB or Classic Load Balancer. This is no longer preferred.

- ELB v2 is the newer generation of load balancers, which includes Application Load Balancer or ALB. ALB does most of what you need, and has advanced routing features that can replace NGINX or Apache for some use cases.

- Network Load Balancer is also referred to as NLB.

To provide authenticated, limited access to assets inside private subnets, we often deploy jump hosts, also known as bastions, in the public subnets. Engineers can tunnel through these bastions to get access to private subnets, most often using SSH.

More recently, site-to-site VPNs have become a popular alternative, but that is a more advanced technique and I will not cover it in detail here. Interested readers can dive into WireGuard’s docs or Tailscale’s docs for more information.

Net. 2: Using load balancers and bastions.

Security Groups #

A VPC has many Security Groups. Most resources inside the VPC that have network interfaces can be added to one or more security groups. For example, we have a security group named web for all of our web servers, and another named database for all of our RDS databases.

A security group is like a firewall: it controls traffic that can enter and leave by maintaining lists of allowed sources and destinations. However, security groups have a feature that most firewalls do not: their rules can name other security groups as allowed sources or destinations, which removes the need to use static IP addresses in these lists. (Security group rules only allow traffic; there are no deny rules.)

In the example above, we can configure database to allow TCP traffic from web on port 3306. Then, any web server that is inside web can reach our databases on port 3306, without us worrying about how their IP addresses change as we scale in or scale out.
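
The web and database example can be modeled in a few lines. This is a toy simulation of group-based rules, assuming a much simpler rule format than AWS really uses. The classes, the `allows` helper, the IP addresses, and the `batch` group are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class SecurityGroup:
    name: str
    # Allow-only ingress rules as (protocol, port, source group name).
    ingress: set = field(default_factory=set)

@dataclass
class Instance:
    ip: str
    groups: set  # names of the security groups this instance belongs to

def allows(dst_group: SecurityGroup, src: Instance, protocol: str, port: int) -> bool:
    """True if any of the source's groups is named in a matching rule."""
    return any((protocol, port, g) in dst_group.ingress for g in src.groups)

# database allows TCP 3306 from anything in the web group.
database = SecurityGroup("database", ingress={("tcp", 3306, "web")})

web_1 = Instance("10.3.1.15", {"web"})
web_2 = Instance("10.3.2.99", {"web"})    # added during scale-out, new IP
other = Instance("10.3.1.16", {"batch"})  # not in web, so no rule matches

print(allows(database, web_1, "tcp", 3306))
print(allows(database, web_2, "tcp", 3306))
print(allows(database, other, "tcp", 3306))
```

Note that the rule never mentions an IP address: `web_2` is admitted purely because of its group membership.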

VPC Peering and PrivateLink #

Suppose we have 2 VPCs using 10.3.0.0/16 and 10.4.0.0/16. Notice that these CIDRs do not overlap. If we want to allow any subnet in the former to reach any subnet in the latter, we can peer the two VPCs. This is common in multi-region setups.

You can imagine that the route table for 10.3.0.0/16 would include:

| Destination | Target | Explanation |
|---|---|---|
| 10.3.0.0/16 | local | intra-VPC |
| 10.4.0.0/16 | pcx-peering-connection | inter-VPC |
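
As a sketch of the reasoning above, the hypothetical helper below refuses overlapping CIDRs and otherwise produces the two rows shown in the table. It only models the idea and does not call any AWS API.

```python
import ipaddress

def peering_routes(local_cidr: str, peer_cidr: str, pcx_id: str) -> list:
    """Build the route rows one side of a peering would need."""
    local = ipaddress.ip_network(local_cidr)
    peer = ipaddress.ip_network(peer_cidr)
    if local.overlaps(peer):
        # With overlapping ranges, a destination could mean either VPC.
        raise ValueError(f"{local} overlaps {peer}")
    return [(str(local), "local"), (str(peer), pcx_id)]

routes = peering_routes("10.3.0.0/16", "10.4.0.0/16", "pcx-peering-connection")
for destination, target in routes:
    print(destination, target)
```

Calling it with `10.3.0.0/16` and `10.3.128.0/17` raises `ValueError`, because the second range sits inside the first.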

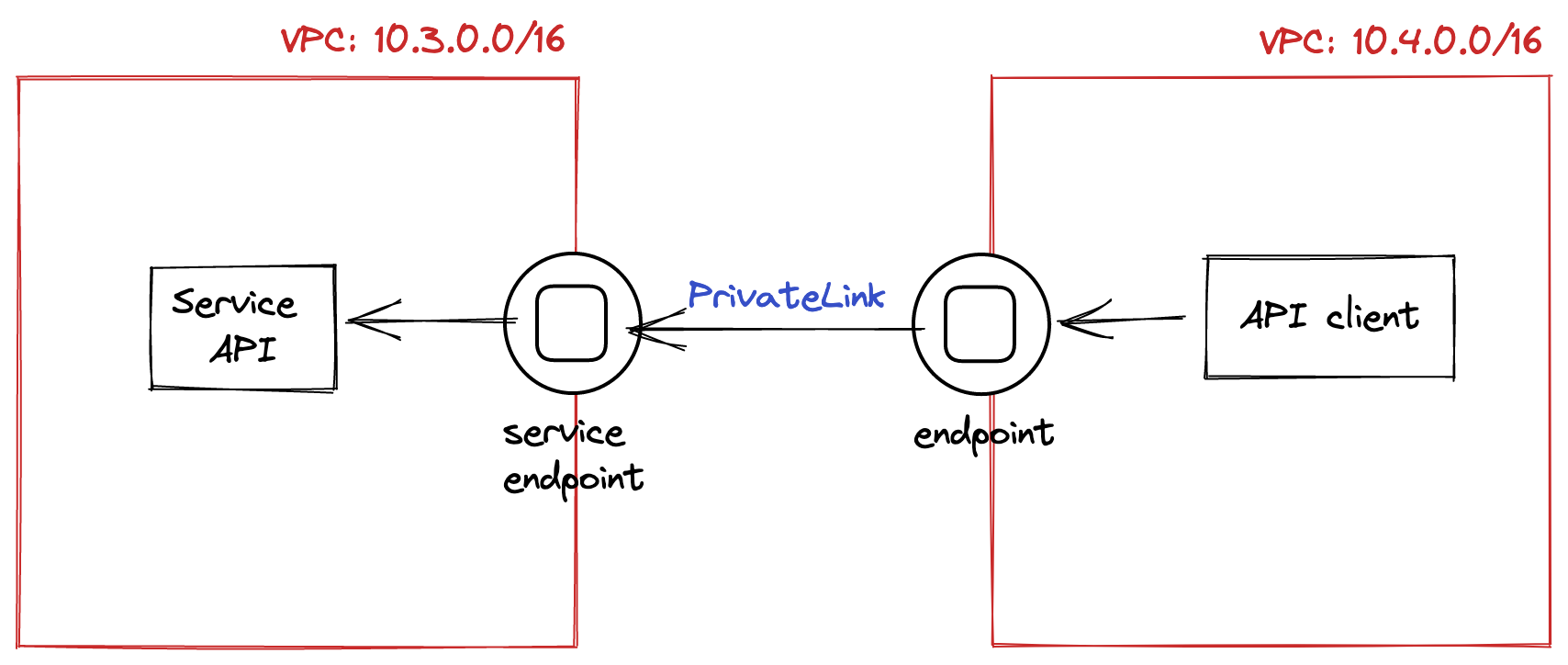

Sometimes, we want to expose specific services to another VPC, without exposing all of our subnets. We can do this using PrivateLink:

- In our VPC, we would create an endpoint service and point this at our internal service. The endpoint service has an ID.

- In the peer VPC, we would create a VPC endpoint and bind it to the above endpoint service using its ID.

Net. 3: Connecting 2 VPCs using PrivateLink.

If we are in the same region, the traffic between the VPC endpoint and the endpoint service stays within AWS’s data centers. This is the “private” part of PrivateLink.

Aside: Regions, and Availability Zones #

When you buy resources from AWS, you always have to answer the question: where do you want it?

This “where” takes 2 pieces of information:

- region

- availability zone

You can think of regions as data centers. For example, AWS has data centers in Oregon and Tokyo, and they are available for your use as us-west-2 and ap-northeast-1 respectively.

You can think of availability zones (AZs) as buildings within the data center. Different data centers have different numbers of buildings, and these change from time to time as AWS invests in infrastructure.

Generally, hardware within a single AZ has correlated failures (imagine that it shares the same power supply), and hardware in different AZs is isolated. However, it is understood that AWS sometimes deploys changes to many AZs in the same region simultaneously, which can cause region-wide failures.